The Coming Disruption by AI-Native Companies

Legacy companies, creators, and your garage in focus

AI-native companies are forecast to become a reality, according to pieces from the WSJ, Microsoft, and Cognizant. Here’s an excerpt from Duncan Roberts, a researcher at Cognizant:

While AI-native businesses as we’ve defined them don’t exist yet, they soon will. These AI-first upstarts will insert AI (and increasingly generative AI) into the fabric of everything they do. And because their whole mindset is centered on AI-driven capabilities, the technology will drive how they think and do things.

Unburdened by the constraints of legacy systems and entrenched approaches, AI-native businesses will see AI not as a tool to be bolted on, but as the fundamental building block of their operations.

This pure AI mindset will allow them to capitalize on generative AI’s strengths, from the tailored experiences customers crave, to blazingly fast internal processes, to business models that, to a traditional business’s eye, appear inside-out and upside-down.

Like the digital-native businesses before them (Uber, Netflix, Venmo), AI-native businesses will also change how consumers behave.

If their forecasts come to fruition, a clash with traditional companies is coming.

Traditional companies incorporating AI have advantages, such as valuable internal data and established relationships with customers and regulators, but they face challenges such as over-reliance on legacy systems, departmental silos, and office politics.

AI-native companies face significant challenges, including legal liability risks, regulatory hurdles, and potentially insufficient publicly available training data. However, they also hold advantages that position them to leapfrog any traditional businesses struggling to adapt. These advantages include lean founding and operating teams, high risk tolerance as disruptors, and low barriers to entry—you no longer need to know how to code to start a software company. Every garage is now a potential incubator, not just those belonging to computer science graduates.

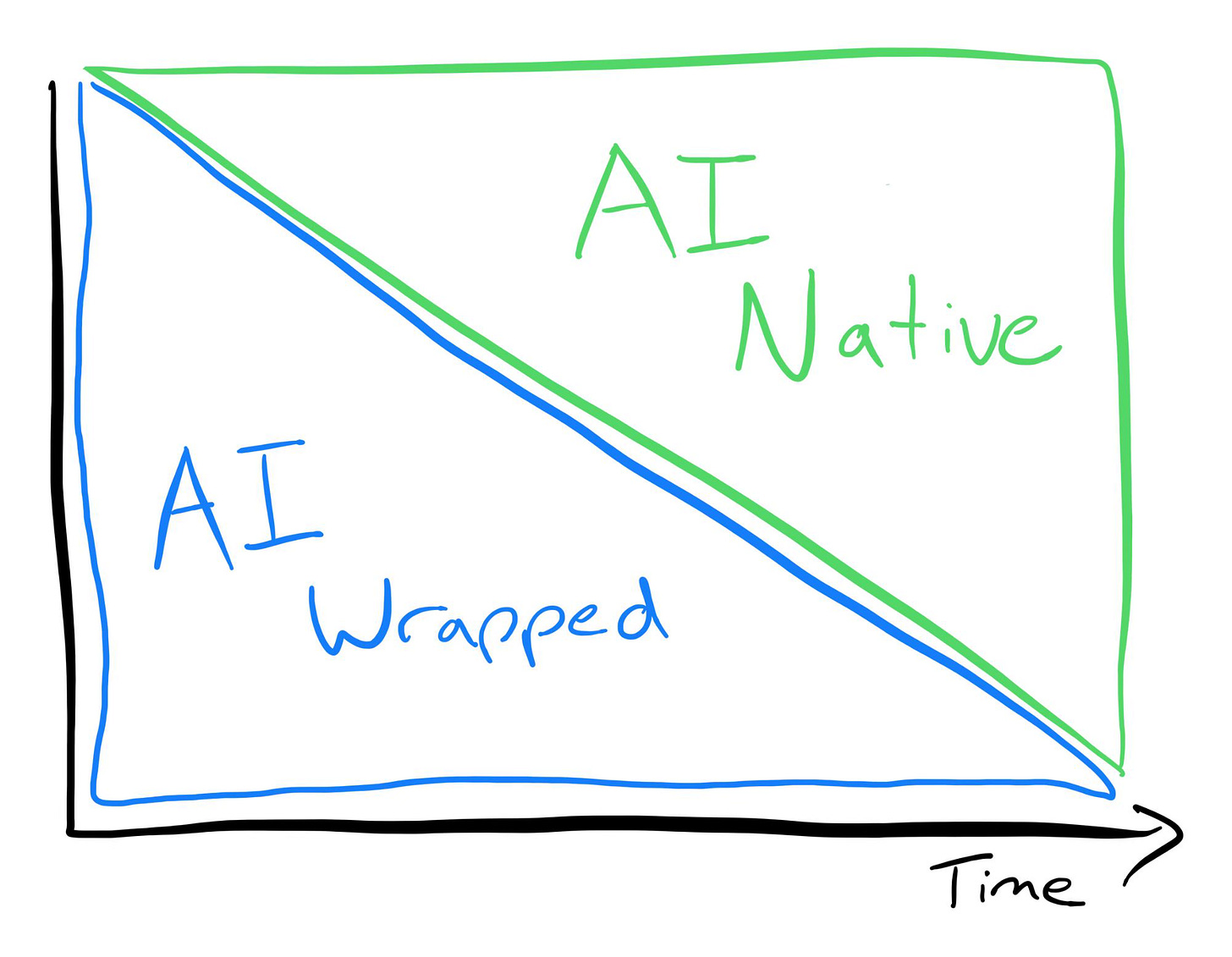

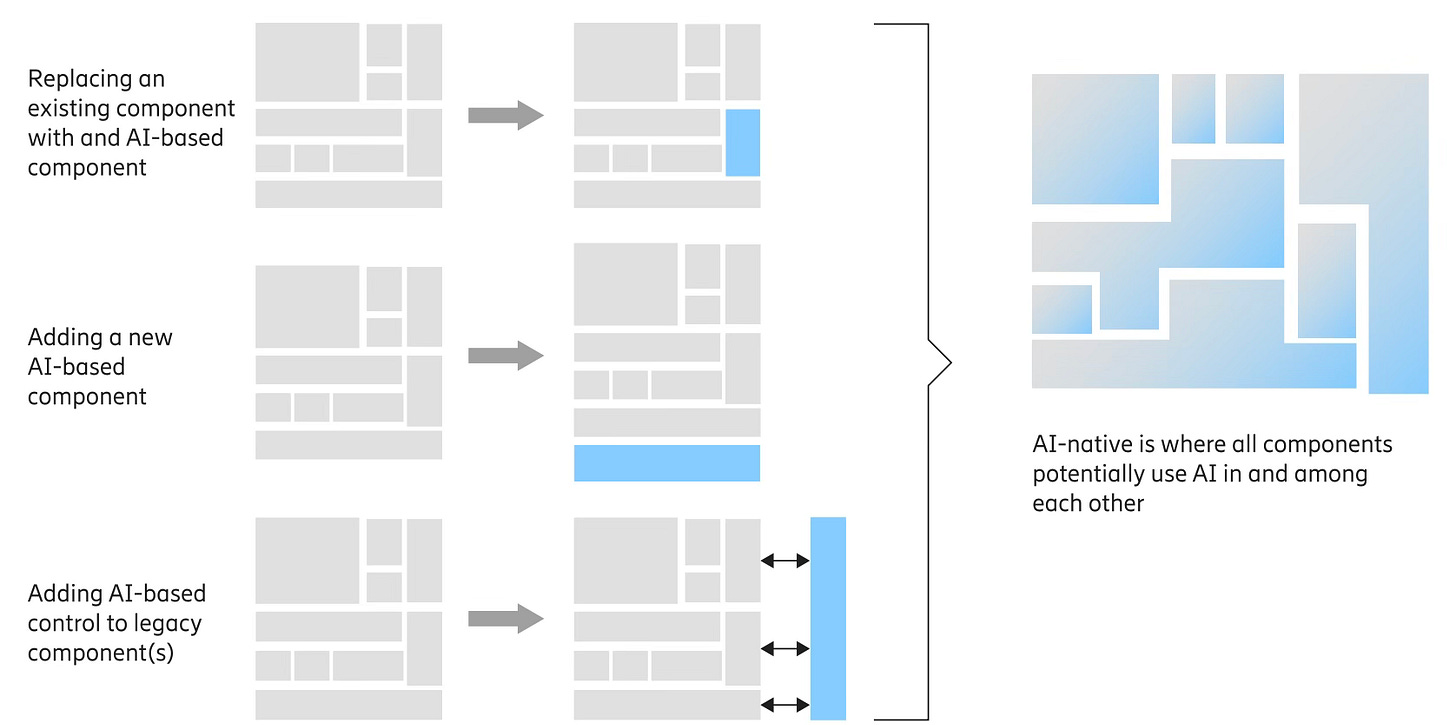

The initial clash will probably center on cost and “human vs. AI” error rates. Creators will first direct foundation models like ChatGPT to generate traditional software at a fraction of its original cost, because these models can translate English into code as easily as they can translate it into German. As AI capabilities advance, creators will wrap AI-generated traditional software “guardrails” around models to reduce errors (models currently have a little fabrication problem when they don’t know the answer to something). Eventually, model error rates might drop far enough that the guardrails can be removed, and at that point we will simply deal directly with AI in its various forms.
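To make the “guardrails” idea a bit more concrete, here is a minimal sketch of traditional software wrapped around a model. Everything in it is an assumption for illustration: `with_guardrails`, `stub_model`, and `is_whole_number` are hypothetical names, and the stub stands in for a real foundation model call. It shows one simple pattern (validate the output, fall back if it fails), not how any particular product does it.

```python
from typing import Callable, Iterable

# Hypothetical types: a "model" is any callable that maps a prompt to text,
# and a "check" is any callable that accepts or rejects an answer.
Model = Callable[[str], str]
Check = Callable[[str], bool]

FALLBACK = "I'm not sure; please check with a human."


def with_guardrails(model: Model, checks: Iterable[Check],
                    fallback: str = FALLBACK) -> Model:
    """Wrap a model so its answer is returned only if every check passes."""
    checks = list(checks)

    def guarded(prompt: str) -> str:
        answer = model(prompt)
        if all(check(answer) for check in checks):
            return answer
        # The answer failed a check, so return a safe fallback instead.
        return fallback

    return guarded


def is_whole_number(answer: str) -> bool:
    """Illustrative check: the caller expects a non-negative whole number."""
    return answer.strip().isdigit()


def stub_model(prompt: str) -> str:
    """Stand-in for a real model: one canned answer, otherwise a vague guess."""
    canned = {"What is 2 + 2?": "4"}
    return canned.get(prompt, "probably quite a lot")


ask = with_guardrails(stub_model, [is_whole_number])
print(ask("What is 2 + 2?"))
print(ask("How many users signed up last week?"))
```

In this sketch the first question passes the check and comes back unchanged, while the second gets the fallback because the stub's guess is not a number. Removing the guardrails later would just mean calling the model directly.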

Some early real-world examples of AI-wrapped applications include the following:

AlphaSense, a market intelligence and search platform that helps businesses analyze financial documents, earnings calls, news, and research reports.

Modis AI, a staffing and workforce platform that helps businesses match talent to jobs, optimize hiring processes, and predict workforce trends.

Priori, a personal growth app that transforms priorities into daily actions, personalizes goals, and gamifies progress to boost motivation.

To tether two of those examples to the real world: a friend of mine uses AlphaSense at work, and the company is valued at $4 billion according to PitchBook. My brother, Will, created Priori. It’s currently in family beta testing, and we’re looking forward to the full launch this spring!

The application layer is primed for creators to build AI-native companies, and the model and middleware layers are ready to support them. The garage door is open, and maybe your brother is in there too.

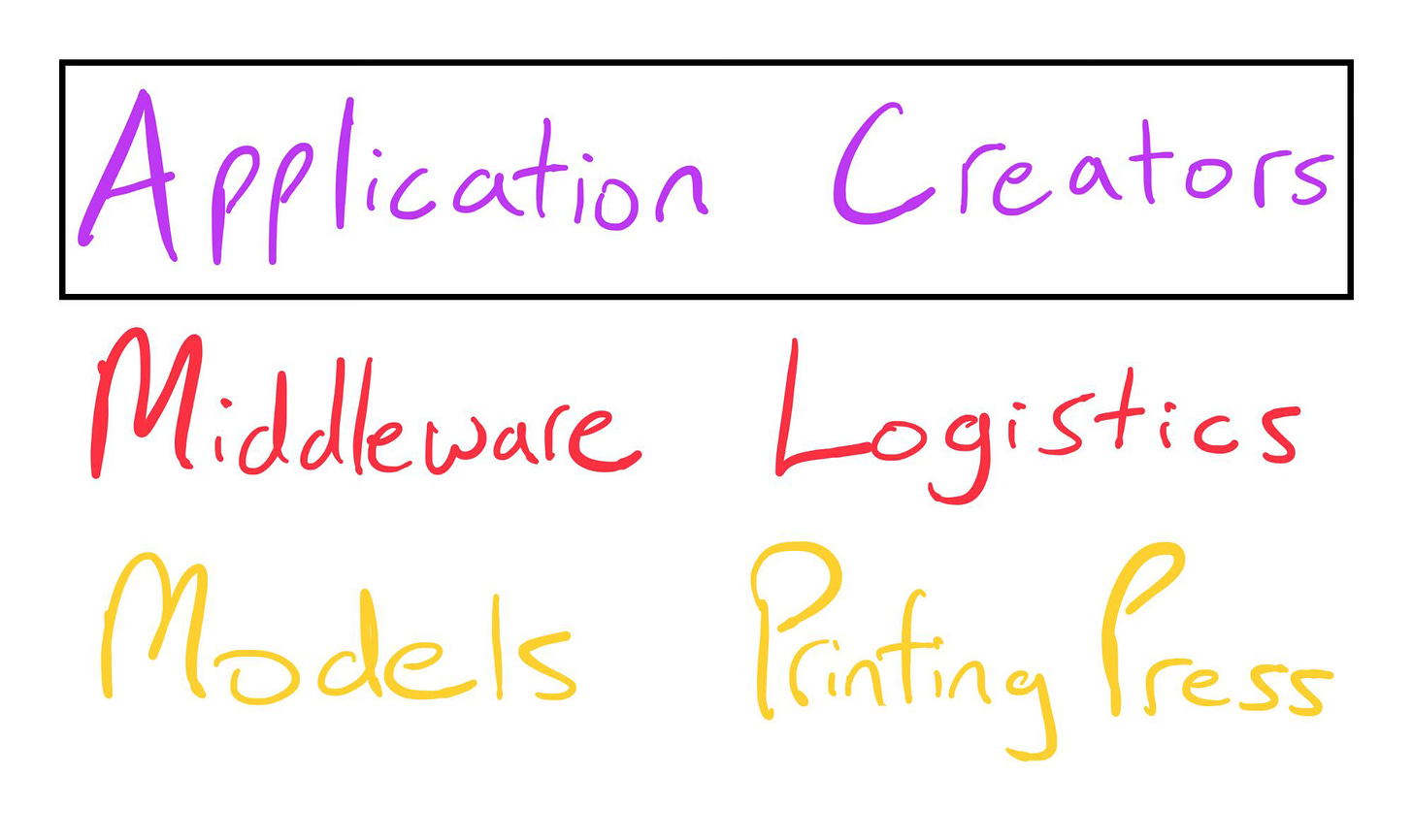

To wrap up this series on the top half of the AI stack:

As mentioned in the first article of this series, “How AI Is Creating New Avenues to Demonstrate Intelligence”, the creation of foundation models such as ChatGPT is often compared to the creation of the printing press. The overwhelming majority of the value of that invention accrued to content creators (authors, lawyers, etc.).

Today, the content of these creators can be found in the “training data” layer of the AI stack, below the models layer. Their content surfaces in model outputs, and the original creator goes uncompensated. Making matters worse, content creators are no longer receiving the web traffic they once did, so their incentive to keep creating is dropping. Hold that thought.

The second article in this series, “Pinballs & Pathways: Overlooked AI Logistics Networks”, essentially discussed “what logistics infrastructure can do for models and applications”, but this middleware infrastructure can help original content creators too. Some middleware players are devising ways to block and toll the “training data harvesting bots” that crawl creators’ websites. It may someday be possible to pay content creators not just on the training data harvesting end but also on the application end, when model outputs reference specific creators’ content. That would be kind of like the Spotify business model, where artists get a piece of the listener subscription revenue based on the number of listens their music garners.
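For a feel of how paying creators on the application end could work, here is a small sketch of a pro-rata split: each creator's share of a revenue pool is proportional to how often model outputs referenced their content. The function name, the creator names, and every number are made up for illustration, and this is a simplified model rather than any platform's actual payout formula.

```python
from typing import Dict


def pro_rata_payouts(revenue_pool: float,
                     reference_counts: Dict[str, int]) -> Dict[str, float]:
    """Split a revenue pool across creators in proportion to reference counts."""
    total = sum(reference_counts.values())
    if total == 0:
        # Nobody was referenced this period, so nobody is paid.
        return {creator: 0.0 for creator in reference_counts}
    return {
        creator: revenue_pool * count / total
        for creator, count in reference_counts.items()
    }


# Hypothetical month: a $1,000 pool and 1,000 total references.
payouts = pro_rata_payouts(
    1000.0,
    {"author_a": 600, "author_b": 300, "author_c": 100},
)
for creator, amount in payouts.items():
    print(f"{creator}: ${amount:.2f}")
```

With these made-up numbers, the creator behind 60% of the references receives 60% of the pool, and so on down the list. The hard part in practice is the counting itself, which is where the middleware layer would come in.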

It is such an interesting time to follow developments in this space; every day there is something new! I will remain glued to my favorite tech news aggregator, Techmeme.

Thanks for reading!

Disclosure: This article is for informational purposes only and is not investment advice.