Pinballs & Pathways: Overlooked AI Logistics Networks

How AI logistics can enable new applications in the way Shopify enabled online business

While the printing press itself was ultimately commoditized, the supporting infrastructure built around it has not been. Today, logistics companies such as Amazon, DoorDash, and Manna are opening up new possibilities, such as delivery within hours, or even within minutes in the case of Manna’s ice cream delivery flights. Technological innovation keeps improving last-mile delivery speed, reliability, and security. New applications native to these innovations, such as local ghost kitchens or the global e-commerce titan Shein, are unlocking significant value for customers.

The Logistics-Middleware Connection

In line with what happened to the printing press, AI foundation models already appear to be undergoing a degree of commoditization. The models’ technological “bake-off”, in which a new model seems to land on top every week, is interesting to follow. Still, I find the two layers that sit on top of foundation models more interesting: middleware, which is often overlooked, and applications.

The middleware players in the AI stack offer a suite of complementary logistics services. These include fast, secure content distribution and, more recently, AI inference capabilities that let models run closer to users, reducing latency and improving performance. In this layer, smaller companies such as Cloudflare and Fastly compete with large players such as AWS and Microsoft Azure.

Daniel Kahneman & the Inference Pinball Machine

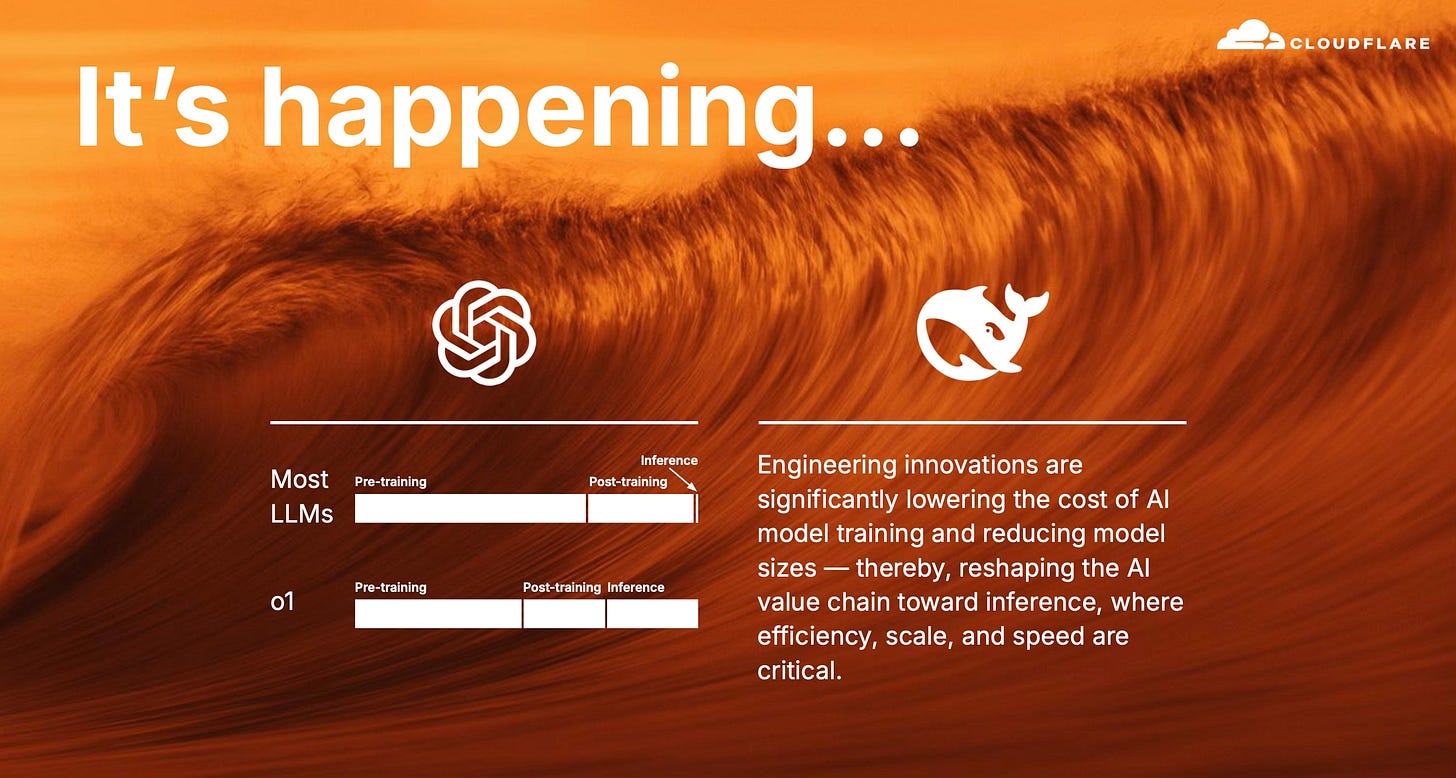

The recent shift toward inference compute is the latest signal of the crucial role middleware platforms play in enabling AI applications.

For context, foundation models are pre-trained on massive datasets using expensive GPUs. The result is a file of learned parameters (the model’s weights) that distills the knowledge the model has learned. Post-training then tests and refines the model, which also consumes significant GPU time and power. Both steps are typically performed in large, centralized data centers equipped with GPUs.

Inference, on the other hand, is better performed in smaller, decentralized data centers.

Unlike training, inference is the model’s actual use in the world. A user sends in a prompt, the prompt bounces off the model (think racquetball), and the user receives a response. That bounce is a single-shot, short burst of thinking that requires only a small amount of GPU time and power. It is akin to the fast, intuitive System 1 thinking described in Daniel Kahneman’s Thinking, Fast and Slow.

These single-shot models are useful. However, foundation model creators have realized that they can improve a model’s reasoning by having it work through a problem in several steps before answering, creating a “chain-of-thought” model. When a user sends a prompt to a chain-of-thought model such as OpenAI’s o1, the prompt bounces around several times (think pinball machine), which requires more GPU inference time. This is akin to Kahneman’s slower, more deliberate System 2 thinking.

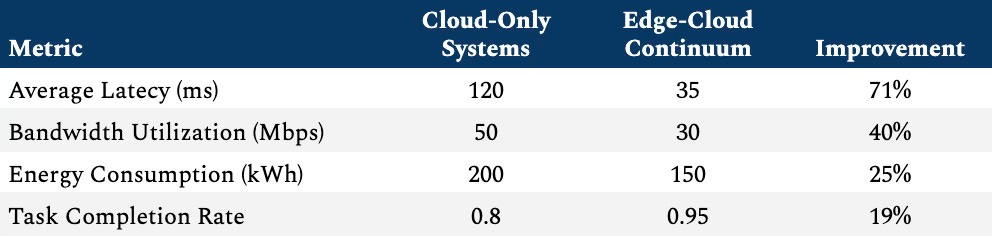

Centralized inference relies on distant cloud servers. As a result, it struggles with latency and lacks flexibility for real-time, user-specific outputs. Decentralizing inference cuts these delays and enables localized model tuning, such as adapting AI to regional languages or preferences. CDN providers in the middleware layer are particularly well positioned to capitalize on this trend. Their globally distributed networks of small-scale data centers can add GPU capabilities at the edge, within the last mile of users, increasing the overall value of their platforms.
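To put rough numbers on why distance matters, here is a minimal sketch that computes only the physical floor on network delay. It assumes light in optical fiber travels at roughly 200,000 km/s, which is about two-thirds of its speed in a vacuum. The two distances and the five-call workflow are hypothetical, chosen purely for illustration. Real round trips are slower because of routing detours, queuing, and the GPU compute time of inference itself, none of which is modeled here.

```python
# Speed-of-light floor on network delay, ignoring routing, queuing, and compute.
# Assumption: light in optical fiber covers roughly 200,000 km/s,
# i.e. about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(distance_km: float) -> float:
    """Minimum round-trip propagation delay (there and back) in milliseconds."""
    return 2 * distance_km / FIBER_KM_PER_MS


def min_network_delay_ms(distance_km: float, sequential_calls: int = 1) -> float:
    """Lower bound on network delay when an application calls a model
    several times in sequence. Each call pays at least one full round trip."""
    return sequential_calls * min_rtt_ms(distance_km)


if __name__ == "__main__":
    # Hypothetical distances: a far-away centralized region vs. a nearby edge site.
    for label, km in [("distant centralized region (4,000 km)", 4000.0),
                      ("nearby edge location (50 km)", 50.0)]:
        one = min_network_delay_ms(km)
        five = min_network_delay_ms(km, sequential_calls=5)
        print(f"{label}: {one:.1f} ms for one call, {five:.1f} ms for five sequential calls")
```

Under these assumptions, a request to a region 4,000 km away cannot complete a round trip in less than about 40 ms. For a site 50 km away, the floor is about 0.5 ms. The gap multiplies with every sequential call an application makes, which is one reason proximity matters more as AI workloads become more interactive.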

The move toward running AI closer to users matters, but an even deeper advantage belongs to the networks that handle a large and diverse share of global internet traffic. Netflix, for example, carries roughly five to ten percent of global traffic depending on how you measure it, though almost all of that is streaming video. Cloudflare, on the other hand, carries a meaningful slice of traffic across the full mess of human and machine behavior. It can watch real-time patterns in how people and autonomous agents (called bots when malicious) interact online, and that kind of visibility becomes a source of trust once agents start acting on our behalf.

Developers on Cloudflare can also build inside one unified environment rather than glue together a long list of providers. This simplicity, paired with the other advantages already discussed, may matter in a future where advances in AI slowly push large parts of the current SaaS layer toward obsolescence. If that happens, value may drift down ‘the stack’ to a more durable position in infrastructure, a layer where Cloudflare is already well positioned.

Past platforms such as Shopify and Meta Platforms birthed entire waves of new businesses by lowering the barrier to creation. They created their own customer bases, as Ben Thompson once said of Meta. AI logistics platforms will have the chance to play that same role for the coming generation of AI-native applications.

Disclosure: This article is for informational purposes only and is not investment advice.

Thanks for reading—this article is the second in a series covering the top half of the AI stack. The first, “How AI Is Creating New Avenues to Demonstrate Intelligence”, can be found here. The third and final, “The Coming Disruption by AI Native Companies”, can be found here.